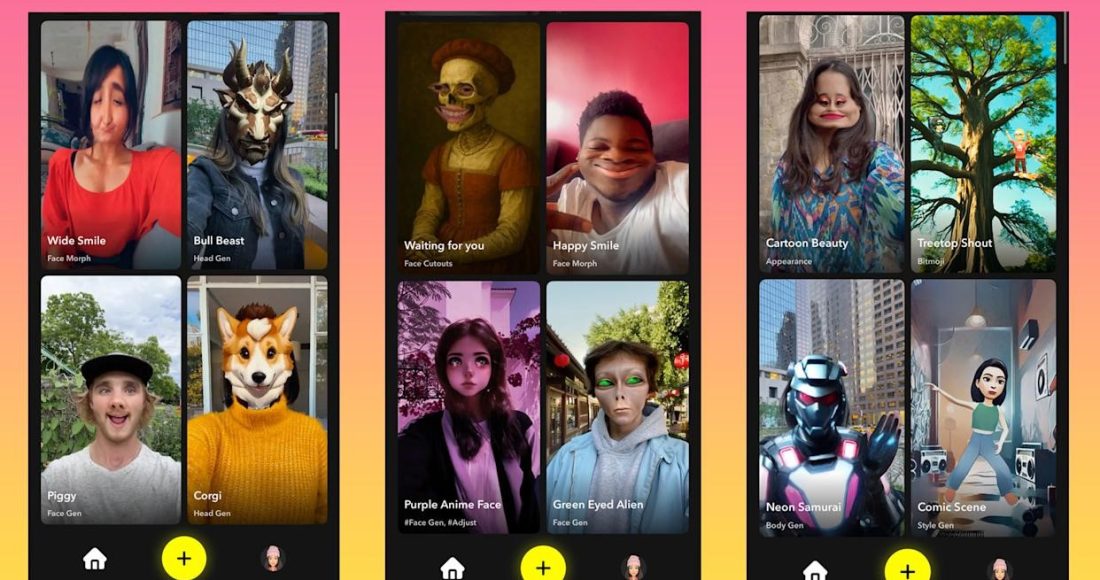

For the past several years, Snapchat has steadily integrated generative AI into its augmented reality (AR) lenses, offering users increasingly sophisticated and dynamic filters. Now, the company is taking a major step toward democratizing AR effect creation. With the launch of a new standalone mobile application, Snap is empowering all users—not just developers—to design, generate, and share their own AR lenses using intuitive AI tools and simple text prompts.

From Pro Tool to Playground: Lens Studio Goes Mobile

Previously, creating AR lenses for Snapchat required Lens Studio, a desktop application aimed at professional developers and AR artists. While powerful, it demanded technical skill and was out of reach for the average user.

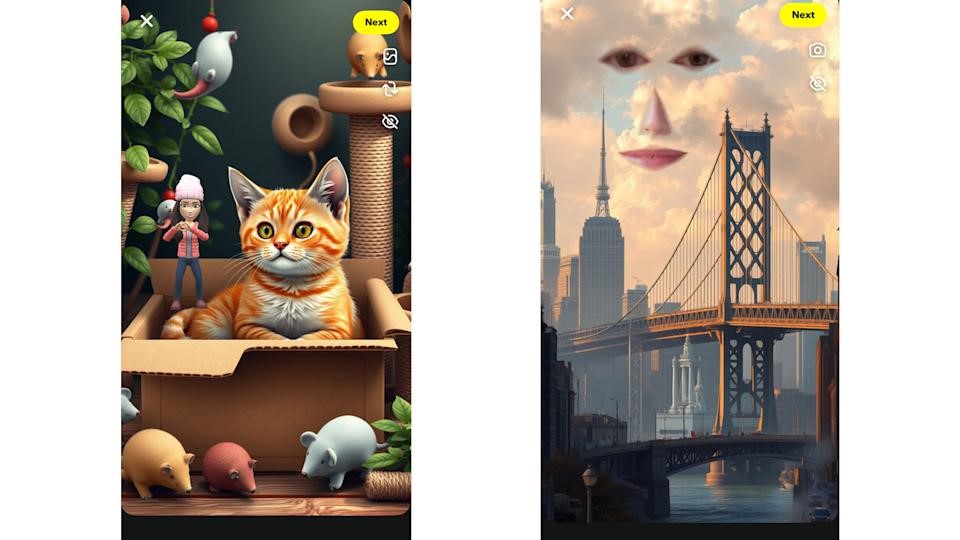

Snap’s newly introduced iOS app and web version of Lens Studio change that entirely. Though less technically complex than the desktop edition, the new platform uses generative AI to let everyday users produce face-, body-, and environment-altering lenses. By describing an idea in text, anyone can now generate custom AR experiences without prior 3D modeling or coding knowledge.

According to Snap, these are “experimental new tools that make it easier than ever to create, publish, and play with Snapchat Lenses made by you.” The shift signals a strategic effort to greatly expand the universe of creators and deepen engagement through personalized content.

How It Works: AI-Prompted AR in Minutes

The new Lens Studio app offers several pathways to create lenses:

- Text-to-AR Generation: Users can type descriptive prompts, such as “zombie head with oversized eyes and intricate skin details,” and the AI will generate a lens based on the description. The app even suggests effective prompt phrases to guide newcomers.

- Pre-Built Templates: For those who want to start quickly, dozens of customizable templates are available. Users can remix these with their own text or visual adjustments.

- Bitmoji and Classic Effects: The app also supports integrating personalized Bitmoji avatars and Snapchat’s classic visual tricks, like face cutouts and dynamic animations, enabling playful hybrid creations.

There is a learning curve, however. Some complex AI generations can take up to 20 minutes to render, and not every prompt delivers perfect results on the first try, so experimentation is part of the process.

Opening Doors for Creators and Potentially New Revenue

Snapchat already boasts a community of hundreds of thousands of lens creators, many of whom have built effects for years. But by simplifying the creation process, Snap is likely to attract a much broader audience—from casual users to aspiring designers.

Notably, lenses published through the new app are eligible for Snap’s Lens Creator Rewards program, which distributes payments to creators whose effects gain widespread use. This creates a tangible incentive for high-quality, popular content even from non-technical creators.

Strategic Positioning in the AR Ecosystem

This move also strengthens Snap’s position in the competitive AR landscape, particularly as Meta discontinued Spark AR, its platform for Instagram AR effects, last year. By lowering the barrier to entry, Snap may attract displaced creators and reinforce its role as a leader in social AR.

Furthermore, Snap continues to invest in hardware with its next-generation AR glasses. While professional developers are still essential for building advanced glasses-compatible experiences, this new wave of user-generated lenses could fuel creative experimentation and uncover new use cases for wearable AR.

Conclusion: A Playful Step Toward the Future of AR

Snap’s new Lens Studio app isn’t just a tool—it’s an invitation. By merging generative AI with augmented reality, Snap is allowing millions of users to become creators themselves. While the technology is still evolving and some delays in rendering remain, the ability to imagine a lens and bring it to life through words marks a significant leap toward more open, expressive, and inclusive AR ecosystems.